To the average person, packet loss seems like a technical term with little to no meaning. For the UC engineer or IT professional, it’s a familiar term that most associate with one key desire: learning how to troubleshoot it faster and with more confidence. So, what exactly is packet loss?

In a phone call, your device sends packets of data across a computer network, and those packets are counted on the receiving end. If any packet traveling across the network fails to reach its destination (e.g., the other person’s phone), packet loss has occurred. Because everyone can experience packet loss, monitoring and troubleshooting it should be less of a specialty request and more of a crucial tool for every unified communications professional.

Ready to resolve video call issues faster? Check out our best ways to troubleshoot a video call.

How much packet loss is acceptable?

In general, it depends on the medium. In presentation scenarios such as screen sharing, a perfect connection matters less. Packet loss has a less profound impact on the presentation experience, so a reasonable amount could be 5-8%. With modalities like audio and video, however, packet loss has a much more dramatic impact on the call. Any significant packet loss on a voice call can create a real distraction, and the same goes for video. Thus, for voice and video calls, 3-5% packet loss could be considered “acceptable”.
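As a rough illustration, the ranges above can be turned into a simple per-modality check. This is only a sketch: the dictionary name, the modality keys, and the choice to use the top of each range as the cutoff are assumptions made for the example, not a standard.

```python
# Rough upper bounds (percent) taken from the ranges quoted above.
# Assumption: the top of each range is used as the cutoff.
ACCEPTABLE_LOSS_PCT = {
    "screen_share": 8.0,
    "audio": 5.0,
    "video": 5.0,
}


def is_acceptable(modality: str, loss_pct: float) -> bool:
    """Return True if loss_pct is within the rough limit for this modality."""
    return loss_pct <= ACCEPTABLE_LOSS_PCT[modality]


print(is_acceptable("screen_share", 6.0))  # True
print(is_acceptable("audio", 6.0))         # False
```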

How do you calculate packet loss?

Packet Loss % = (Packets Sent – Packets Received) / Packets Sent × 100

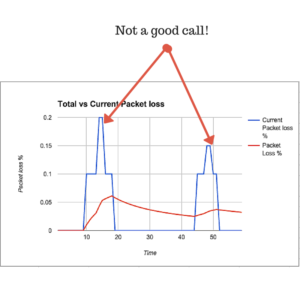
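The formula is easy to express in a few lines of Python. This is a minimal sketch; the function name and the input checks are choices made for the example.

```python
def packet_loss_percent(packets_sent: int, packets_received: int) -> float:
    """Packet loss as a percentage of the packets sent."""
    if packets_sent <= 0:
        raise ValueError("packets_sent must be positive")
    if packets_received < 0:
        raise ValueError("packets_received cannot be negative")
    # Never report negative loss (e.g. if duplicates inflate the received count).
    lost = max(packets_sent - packets_received, 0)
    return 100.0 * lost / packets_sent


print(packet_loss_percent(1000, 980))  # 2.0
```

For example, if 1,000 packets were sent and 980 arrived, 20 were lost, which works out to 2% packet loss.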

Why does packet loss matter?

For starters, packet loss is one of the best ways to understand the dependability and quality of your network. For video, it is very closely tied to the call quality seen by the participants in a meeting. In practice, packet loss means frozen screens, delays, and artifacts in the image. Video is very tough traffic for a network, so packet loss is noticeable to humans at 0.5% and annoying at anything greater than 2%. In fact, if packet loss is any greater than 2%, a call might fail completely. Packet loss is also used in quality functions and algorithms to drive changes such as lowering resolution or bandwidth (also known as downspeeding).

What you don’t know about packet loss

Packet loss can be very misleading. Most call reporting mechanisms focus on total packet loss, which for most calls is about as useful as reporting how long the call was. The reason is that total packet loss for a one-hour call could be well under 1%, even though for five minutes that call experienced 5% packet loss. The report shows a healthy call, yet for those five minutes someone put up with a video call getting choppy every five seconds and missed out on an otherwise great collaboration experience. Those five minutes are exactly what the person responsible for delivering a high-quality video experience needs to focus on.
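To see how a total can hide a bad stretch, here is a small worked example. The numbers are illustrative only: it assumes a hypothetical one-hour call sampled once per minute, a steady 3,000 packets sent per minute, and five minutes at 5% loss.

```python
# Hypothetical one-hour call, sampled once per minute.
# Assumption: a steady 3,000 packets sent per minute (50 packets per second).
PACKETS_PER_MINUTE = 3000

# (sent, received) per minute: 55 clean minutes, then 5 minutes at 5% loss.
intervals = [(PACKETS_PER_MINUTE, PACKETS_PER_MINUTE)] * 55
intervals += [(PACKETS_PER_MINUTE, 2850)] * 5

total_sent = sum(sent for sent, _ in intervals)
total_received = sum(received for _, received in intervals)
total_loss_pct = 100.0 * (total_sent - total_received) / total_sent

# Loss for each minute on its own, and how many minutes exceeded 2%.
per_minute_loss_pct = [100.0 * (s - r) / s for s, r in intervals]
bad_minutes = sum(1 for pct in per_minute_loss_pct if pct > 2.0)

print(f"total loss: {total_loss_pct:.2f}%")       # total loss: 0.42%
print(f"minutes above 2% loss: {bad_minutes}")    # minutes above 2% loss: 5
```

Under these assumptions the call-level total comes out below half a percent, while five separate minutes sat at 5%.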

Rethinking Packet Loss

A more useful metric is current packet loss or, for historical analysis, peak packet loss. When you look at total packet loss, you drastically slant your results in favor of showing all calls as a pleasant experience. This is because the majority of calls lose zero packets most of the time, since the network is usually doing a great job of transmitting packets. The periods where there is packet loss should be zeroed in on rather than looked at as part of the whole.

Thinking about total packet loss per call is fine if you are perfectly happy with most calls being an OK experience most of the time. But it’s important to look at the bigger picture and realize that no other quality metric is measured this way. There is no “total jitter” or “average resolution” option.

This way of measuring packet loss came about while thinking of the network administrator as the end customer. An admin cares about packet loss for most applications because it means packets have to be resent, which slows down the network and everything else that needs to use it. In video, there is no resending. Packet loss means low-quality video, which means frustrated users, which means a low adoption rate or worse: in the case of telemedicine, unhappy patients. Measuring current packet loss, not total, helps give the end user a consistently pleasant experience instead of a call that was merely OK overall.
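One way to picture current and peak packet loss is a sliding window over recent samples. The sketch below is hypothetical: the `LossTracker` class, the five-sample window, and the one-sample-per-minute cadence are all assumptions for illustration and do not describe how any particular monitoring product computes these values.

```python
from collections import deque


class LossTracker:
    """Tracks packet loss over a sliding window of recent samples."""

    def __init__(self, window_size: int = 5):
        # Only the most recent window_size (sent, received) samples are kept.
        self.window = deque(maxlen=window_size)
        self.peak_pct = 0.0

    def current_pct(self) -> float:
        """Loss percentage across the samples currently in the window."""
        sent = sum(s for s, _ in self.window)
        received = sum(r for _, r in self.window)
        if sent == 0:
            return 0.0
        return 100.0 * (sent - received) / sent

    def add_sample(self, sent: int, received: int) -> float:
        """Record one sample, update the peak, and return current loss."""
        self.window.append((sent, received))
        current = self.current_pct()
        self.peak_pct = max(self.peak_pct, current)
        return current


tracker = LossTracker(window_size=5)
for _ in range(50):
    tracker.add_sample(3000, 3000)   # clean minutes
for _ in range(5):
    tracker.add_sample(3000, 2850)   # five minutes at 5% loss
for _ in range(5):
    tracker.add_sample(3000, 3000)   # network recovers

print(tracker.current_pct())  # 0.0
print(tracker.peak_pct)       # 5.0
```

The current value returns to zero once the network recovers, but the peak still records the bad five minutes, which is the detail a call-level total would blur.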

When that unfortunate time comes and you experience packet loss, don’t sweat it. Packet loss happens. But to truly help prevent a high percentage of packet loss in the future, implementing a modern packet loss solution is crucial. Packet loss is frustrating, and with the demand for network usage increasing, it isn’t going anywhere. When you pair a modern packet loss solution with Vyopta’s analytics and monitoring solution, you’re setting your company up to uncover and analyze how much packet loss you’re experiencing and when. It’s no longer a guessing game of “we had packet loss, but we’re not sure when or what it has to do with patterns in our company.” By implementing a modern packet loss solution and taking the further step of implementing Vyopta, organizations will start to see that their standard for packet loss is much higher in the long run.

Learn more about how Vyopta’s Analytics solution helps navigate through the dread of packet loss.